Last modified: 04 Feb 2025

The Kubernetes platform is based on a plug-in architecture that supports highly modular, customizable and scalable deployments. Kubernetes exposes RESTful APIs that we can use to interact with and manage its architectural components. The Kubernetes API Server is the brain of all these components.

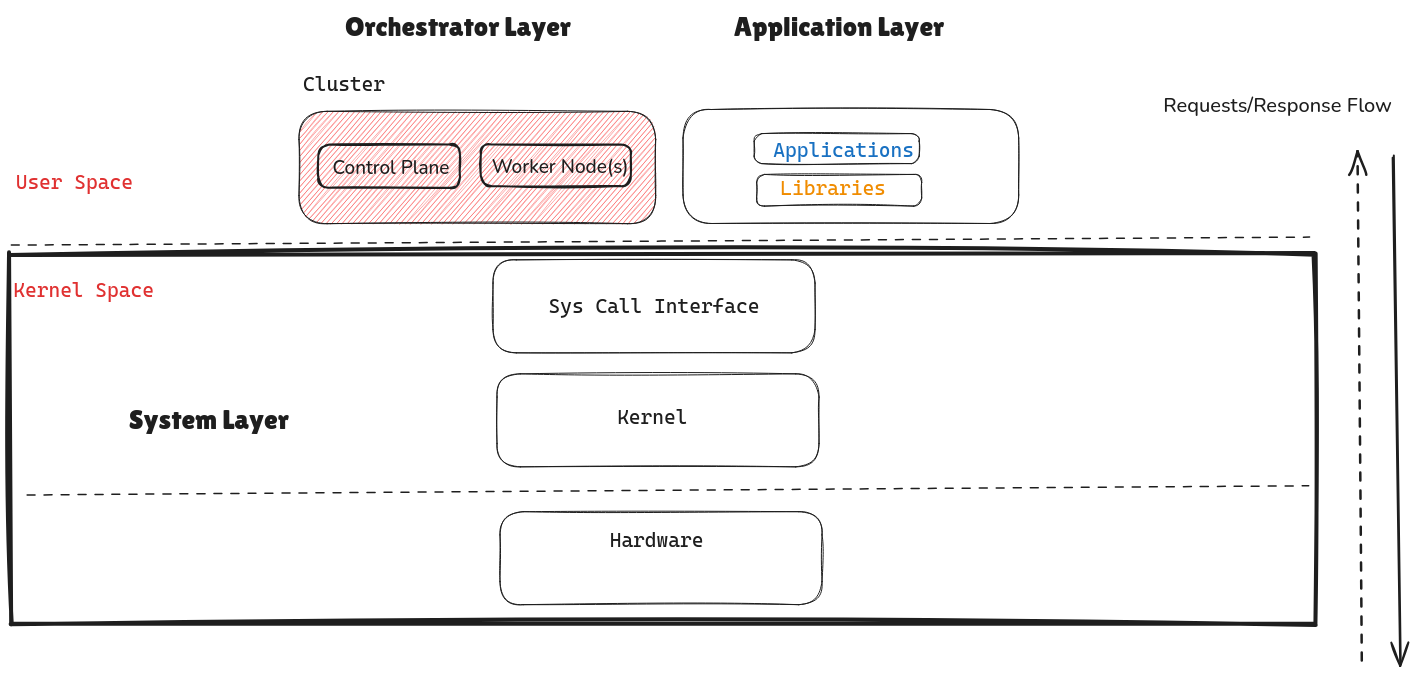

Kubernetes' main components run on 'Nodes'. When you implement a Kubernetes Cluster Architecture, you separate cluster state management from application workloads by using one or more Control Plane nodes and one or more Worker Nodes. In short, the Control Plane components manage workload lifecycle and state. The Worker Nodes, in contrast, run the applications.

How you approach the Kubernetes Architecture will depend on a number of factors. For example, do you want to run some tests on a single Node, or do you want to deploy a more modular Kubernetes Cluster from the outset? Will your applications require high availability in production after testing? Where will you deploy: in the cloud or on-premises?

At its core, Kubernetes is designed to manage containerized workloads and services, facilitating both declarative configuration and automation. The architecture is composed of several key components.

The control plane is the brain of the Kubernetes cluster: it manages the scheduling of pods across worker nodes, monitors the cluster's health, and scales applications up or down as needed. It includes:

1. API Server

The API server acts as the central management hub, handling all RESTful operations and serving as the interface for communication between users, external components, and the cluster. It validates and processes API requests, managing the lifecycle of objects like pods and services through a standardized protocol.

2. Controller Manager

The Controller Manager runs the various controllers that govern the state of the cluster. Each controller watches the state of the cluster via the API server and makes the changes needed to bring the current state in line with the desired state, such as scaling deployments and managing node lifecycles.

3. Scheduler

The scheduler is responsible for assigning newly created pods to nodes based on resource requirements, policies, and constraints. It evaluates the current state of the cluster, considering factors like resource availability and affinity/anti-affinity rules, to optimize the allocation of workloads.

4. etcd

etcd is the consistent and highly available key-value store that holds all cluster data, such as object state and configuration. It ensures that data is reliably stored and accessible, providing the foundation for Kubernetes' consistent state management and recovery mechanisms.
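The scheduler's behaviour described above is often summarized as "filter, then score". The following is a minimal sketch of that idea, not the real scheduler: the `Node` and `Pod` classes, the field names, and the "most free capacity left" scoring rule are all assumptions made for illustration.

```python
from dataclasses import dataclass


@dataclass
class Node:
    name: str
    free_cpu_m: int    # free CPU in millicores (hypothetical field)
    free_mem_mi: int   # free memory in MiB (hypothetical field)
    labels: dict


@dataclass
class Pod:
    name: str
    cpu_m: int          # requested CPU in millicores
    mem_mi: int         # requested memory in MiB
    node_selector: dict


def feasible(pod, node):
    """Filter step: the node must have room for the pod and match its selector."""
    fits = node.free_cpu_m >= pod.cpu_m and node.free_mem_mi >= pod.mem_mi
    matches = all(node.labels.get(k) == v for k, v in pod.node_selector.items())
    return fits and matches


def score(pod, node):
    """Score step: favour the node that keeps the largest share of capacity free."""
    cpu_left = (node.free_cpu_m - pod.cpu_m) / max(node.free_cpu_m, 1)
    mem_left = (node.free_mem_mi - pod.mem_mi) / max(node.free_mem_mi, 1)
    return (cpu_left + mem_left) / 2


def schedule(pod, nodes):
    """Return the chosen node name, or None if no node is feasible (pod stays pending)."""
    candidates = [n for n in nodes if feasible(pod, n)]
    if not candidates:
        return None
    return max(candidates, key=lambda n: score(pod, n)).name
```

Separating filtering from scoring keeps hard constraints (resources, selectors) apart from preferences, which is why a pod with no feasible node is left pending rather than placed badly.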

Worker nodes run the applications. Each node contains the following components:

1. Kubelet

The Kubelet is a critical agent that runs on each worker node, ensuring that containers are running in pods as specified. It communicates with the control plane, retrieves pod specifications via the API server, and monitors the health of the containers, restarting them if they fail.

2. Kube-proxy

Kube-proxy maintains network rules on each node to allow communication between various components. It manages the forwarding of traffic to the correct pod in an efficient manner, implementing service concepts like load balancing and cluster IP address management, ensuring smooth and reliable network traffic flow.

3. Container Runtime

This component is responsible for running the containers on a node. Common container runtimes include containerd and CRI-O; Docker Engine can still be used through the cri-dockerd adapter, because Kubernetes removed its built-in Docker integration (dockershim) in v1.24. The runtime pulls images from container registries, starts and stops containers, and handles low-level container operations, integrating with Kubernetes to manage the container lifecycle.
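To make the kube-proxy role more concrete, here is a toy sketch of a per-node table that maps a Service's cluster IP and port to the pods behind it. The `ServiceTable` class and its methods are hypothetical names, and the random backend choice is only an approximation of how kube-proxy's iptables mode spreads connections.

```python
import random


class ServiceTable:
    """Toy stand-in for the forwarding rules kube-proxy maintains on a node."""

    def __init__(self, seed=None):
        self._backends = {}              # (cluster_ip, port) -> list of "pod_ip:port"
        self._rng = random.Random(seed)  # seedable so behaviour can be reproduced

    def sync(self, cluster_ip, port, endpoints):
        """Replace the backend list whenever the Service's endpoints change."""
        self._backends[(cluster_ip, port)] = list(endpoints)

    def route(self, cluster_ip, port):
        """Pick one backend for a new connection, or None if none is available."""
        backends = self._backends.get((cluster_ip, port), [])
        return self._rng.choice(backends) if backends else None
```

Clients only ever address the stable cluster IP; the table absorbs pod churn, which is what lets pods be replaced without clients noticing.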

When deploying Kubernetes on-premises, you need to plan the Kubernetes cluster architecture to ensure performance, robustness, scalability and security.

One of the primary considerations is the hardware infrastructure. Unlike cloud environments where resources can be easily scaled up or down, on-premises deployments require a thorough assessment of existing hardware capacities and future growth needs. This includes evaluating CPU, memory, and storage requirements for both the control plane and worker nodes.
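A rough first pass at this assessment can be done with simple arithmetic. The sketch below estimates a worker node count from pod resource requests; the function name, the 10% system reservation, and the 20% growth headroom are illustrative assumptions, and the result ignores fragmentation, so treat it as a lower bound.

```python
import math


def nodes_needed(pod_count, pod_cpu_m, pod_mem_mi, node_cpu_m, node_mem_mi,
                 reserved_fraction=0.1, headroom=0.2):
    """Estimate worker nodes from total pod requests.

    reserved_fraction: share of each node kept for the OS and node agents (assumed).
    headroom: extra capacity planned for growth and failover (assumed).
    """
    usable_cpu = node_cpu_m * (1 - reserved_fraction)
    usable_mem = node_mem_mi * (1 - reserved_fraction)
    total_cpu = pod_count * pod_cpu_m * (1 + headroom)
    total_mem = pod_count * pod_mem_mi * (1 + headroom)
    # The scarcer resource decides how many nodes are required.
    return max(math.ceil(total_cpu / usable_cpu), math.ceil(total_mem / usable_mem))
```

For example, 100 pods requesting 500m CPU and 1024 MiB each, on nodes with 16 cores and 64 GiB, come out CPU-bound under these assumptions.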

The networking infrastructure must handle intra-cluster communication efficiently, which means low latency and high throughput for business-critical workloads. Without proper planning, network bottlenecks will degrade the overall performance of the cluster.

Another important aspect is the high availability (HA) and fault tolerance of the Kubernetes control plane components. In an on-premises setup, ensuring HA often means setting up multiple control plane nodes behind load balancers and distributing them across different physical locations to mitigate the risk of a single point of failure. This also involves configuring etcd clusters for data consistency and redundancy. Proper HA configurations ensure that the Kubernetes API server, scheduler, and controller manager remain operational even during hardware failures, thus maintaining the availability of applications running in the cluster.
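When sizing the etcd cluster, it helps to remember that etcd needs a majority of members (a quorum) to accept writes. The helper names below are hypothetical; the majority arithmetic itself is standard for quorum-based systems.

```python
def quorum(members):
    """Smallest majority of an etcd cluster with the given member count."""
    if members < 1:
        raise ValueError("a cluster needs at least one member")
    return members // 2 + 1


def fault_tolerance(members):
    """How many members can fail while the cluster keeps a quorum."""
    return members - quorum(members)


if __name__ == "__main__":
    for n in (1, 3, 4, 5):
        print(n, "members -> quorum", quorum(n), "tolerates", fault_tolerance(n))
```

Because a four-member cluster tolerates no more failures than a three-member one, odd member counts such as three or five are the usual choice.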

Security and compliance are also pivotal in on-premises Kubernetes architecture. Because on-premises deployments leave the whole stack under your control, organizations must enforce robust security practices themselves. This includes implementing network policies, securing etcd data stores, and ensuring that access control mechanisms such as RBAC (Role-Based Access Control) and pod-level security controls are properly configured. Note that Pod Security Policies were removed in Kubernetes v1.25; Pod Security Admission, which enforces the Pod Security Standards, is the built-in replacement.
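The RBAC model mentioned above is allow-only: a request is permitted if any role bound to the subject grants the verb on the resource, and denied otherwise. The sketch below mimics that evaluation in plain Python; the `Rule` class, the `pod-reader` role, and the users are made-up examples, not Kubernetes API objects.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rule:
    resources: frozenset
    verbs: frozenset


# Hypothetical roles and bindings, loosely mirroring Role and RoleBinding objects.
ROLES = {
    "pod-reader": [Rule(frozenset({"pods"}), frozenset({"get", "list", "watch"}))],
}
BINDINGS = {
    "alice": ["pod-reader"],
}


def is_allowed(user, verb, resource, roles=ROLES, bindings=BINDINGS):
    """Return True if any role bound to the user grants the verb on the resource."""
    for role_name in bindings.get(user, []):
        for rule in roles.get(role_name, []):
            if resource in rule.resources and verb in rule.verbs:
                return True
    return False  # nothing granted it, so the request is denied by default
```

Since there are no deny rules, tightening access means removing grants, which is why least-privilege roles are easier to audit than broad ones.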

Compliance with industry standards and internal policies must be maintained, often necessitating audits and continuous monitoring. Regular updates and patches are essential to protect the cluster from vulnerabilities and to keep it compliant with evolving security standards.

When comparing Kubernetes on-premises to cloud deployments, one of the main considerations is the scalability and elasticity of resources.

Cloud environments offer virtually unlimited resources that can be scaled up or down based on demand. This scalability is harder to configure on-premises because of hardware limitations and upfront investment in infrastructure. In cloud deployments, users benefit from the cloud provider’s infrastructure, whereas on-premises setups require configuration to achieve similar levels of performance and redundancy.

Cloud providers offer managed Kubernetes services. These managed services handle most of the administrative tasks, including updates, patching, and scaling. As a result, IT teams can focus more on application development and deployment, and infrastructure management becomes less of a burden. In contrast, Kubernetes on-premises necessitates a dedicated team to manage the cluster infrastructure, which requires significant expertise.

Cost is also a significant factor when choosing between on-premises and cloud Kubernetes. On-premises deployments involve substantial upfront costs for hardware, networking, and infrastructure, as well as ongoing maintenance expenses. However, they can offer more predictable costs over time. Cloud deployments, while offering lower initial costs and the ability to pay only for what you use, can become expensive with high and fluctuating workloads.
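The trade-off between upfront and recurring costs can be framed as a break-even calculation. The sketch below is deliberately simplistic: the function name and all figures passed to it are hypothetical, and it ignores hardware refresh cycles, staffing, and workload growth.

```python
import math


def break_even_months(onprem_upfront, onprem_monthly, cloud_monthly):
    """Months until on-premises spend drops below cloud spend, or None if it never does."""
    monthly_saving = cloud_monthly - onprem_monthly
    if monthly_saving <= 0:
        return None  # cloud is no more expensive per month, so upfront cost is never recovered
    return math.ceil(onprem_upfront / monthly_saving)


if __name__ == "__main__":
    # Made-up figures: 120,000 upfront, 2,000/month on-premises, 7,000/month in the cloud.
    print(break_even_months(120_000, 2_000, 7_000))
```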

Organizations must also consider data sovereignty and compliance with local regulations and industry standards. These requirements often favor on-premises deployments despite the higher management overhead. If your organization operates in the finance, healthcare or telecommunications sectors, compliance is one of the most important factors to consider.

Kubernetes automates the deployment, scaling, and operation of application containers, making it an essential tool for managing microservices architectures. It provides built-in features like load balancing, self-healing, and rolling updates, which ensure that applications remain available and resilient even during updates or failures. Additionally, its declarative configuration model allows for consistent and repeatable deployments, which can be versioned and audited.

1. Scalability

Kubernetes can scale applications effortlessly to handle increases or decreases in load. This elasticity is crucial for modern applications that experience variable traffic.

2. Self-healing

One of Kubernetes' standout features is its self-healing capability. It automatically restarts containers that fail, kills containers that don't respond to user-defined health checks, and replaces and reschedules containers when nodes die.

3. Automated Rollouts and Rollbacks

Kubernetes ensures that your application updates or deployments are automated and controlled. It can progressively roll out changes, monitor the health of the application, and roll back changes if something goes wrong.

4. Service Discovery and Load Balancing

Kubernetes can expose a container using a DNS name or its own IP address. If traffic to a container is high, Kubernetes can load-balance and distribute the network traffic to keep the deployment stable.

5. Efficient Resource Utilization

By packing containers efficiently, Kubernetes ensures the optimal use of resources. This resource optimization leads to cost savings and improved performance.
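The scaling and self-healing behaviour in the list above comes down to a reconciliation loop: compare what is running with what was declared, then act on the difference. The following is a minimal sketch of one such pass, with pods modelled as a plain dictionary; the `reconcile` function and the `replacement-N` naming scheme are assumptions for illustration.

```python
import itertools

_ids = itertools.count(1)  # source of names for newly created pods (hypothetical scheme)


def reconcile(desired_replicas, pods):
    """Run one reconciliation pass.

    pods maps a pod name to True (healthy) or False (failed).
    Returns the pod set after failed pods are dropped and the count is corrected.
    """
    # Drop pods that failed their health checks.
    current = {name: True for name, healthy in pods.items() if healthy}
    # Too few pods: create replacements until the desired count is reached.
    while len(current) < desired_replicas:
        current[f"replacement-{next(_ids)}"] = True
    # Too many pods: remove the surplus.
    for name in sorted(current)[desired_replicas:]:
        del current[name]
    return current
```

Running the pass again on its own output changes nothing, which is the property that lets controllers loop continuously without causing churn.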

Kubernetes also comes with challenges to weigh against these benefits:

1. Complexity

The learning curve for Kubernetes can be steep, especially for those new to container orchestration. The multitude of components and configurations can be daunting.

2. Resource Intensive

Kubernetes itself requires substantial resources to run, which might not be ideal for small-scale applications or environments with limited resources.

3. Security

While Kubernetes provides robust security features, it also introduces new security challenges. Proper configuration and management are crucial to avoid vulnerabilities.

4. Operational Overhead

Managing a Kubernetes cluster can be challenging and requires significant operational overhead, particularly in maintaining the infrastructure and ensuring the smooth operation of all components.

Typical scenarios where Kubernetes is a good fit include:

1. Microservices Architecture

Kubernetes is ideal for applications built on microservices architecture. It allows for independent scaling, deployment, and management of each microservice, enhancing the overall agility and resilience of the system.

2. CI/CD Pipelines

Kubernetes can automate the deployment of applications, making it an excellent choice for continuous integration and continuous deployment (CI/CD) pipelines.

3. Hybrid Cloud Environments

For organizations leveraging a hybrid cloud strategy, Kubernetes provides a consistent platform across on-premises and cloud environments, facilitating seamless workload migration and management.

4. High Availability Applications

Kubernetes is suitable for applications that require high availability and reliability. Its self-healing and automated scaling features ensure minimal downtime and consistent performance.

Kubernetes has become a cornerstone of modern cloud-native application development and deployment. Its architecture, while complex, offers unparalleled benefits in terms of scalability, self-healing, and resource efficiency.

However, it is essential to carefully consider the operational overhead, resource requirements, and security implications before adopting Kubernetes.

When used in the right scenarios, such as microservices, CI/CD pipelines, and hybrid cloud environments, Kubernetes can significantly enhance the agility and resilience of your applications.